HTTPS Access

This FAQ explains how to make your web applications (e.g. Gradio, FastAPI, Streamlit, Flask) publicly accessible on Trooper.AI GPU servers via HTTP(S). This is especially useful for dashboards, APIs, and user interfaces running inside your Blib or template.

🌐🔢 How many public ports do I get?

Every server on Trooper.AI comes with at least 10 public ports per GPU.

That means:

- A 1×GPU machine → 10 public ports

- A 4×GPU machine → 40 public ports

- An 8×GPU machine → 80 public ports

Most users only need 2–4 ports (e.g. one for their app, one for monitoring, one for WebSocket/API access).

You can check your available ports in the dashboard or request a full list from support.

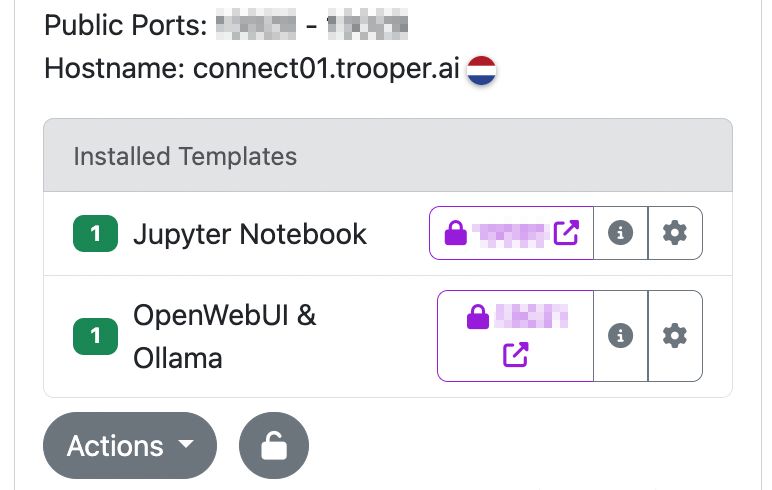

In this screenshot, from top to bottom and left to right, you see:

- Public port range

- Public hostname

- Template names

- Secure lock icon: a secure HTTPS connection is available (click it to open the template’s web UI). Read more about the SSL HTTPS proxy below.

- Info icon for the docs of the template

- Settings button for the template

🚀 How do I expose my app to the internet?

- Start your app so it listens on `0.0.0.0` (not `127.0.0.1`) and on one of your assigned public ports, such as `11307`.

Example (Python/Flask):

```bash
flask run --host=0.0.0.0 --port=11307
```

Example (Gradio):

```python
app.launch(server_name="0.0.0.0", server_port=11307)
```

Example (Gradio, configured from the shell via environment variables):

```bash
GRADIO_SERVER_NAME=0.0.0.0 GRADIO_SERVER_PORT=11307 python your_app.py
```

Example (Docker):

```bash
docker run -p 11307:8080 your-ai-webapp-image
```

- Use one of your assigned public ports, such as `11307`.

Your app will be accessible at:

http://connectXX.trooper.ai:11307
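To check that a port is reachable before you deploy your real app, you can use a throwaway server built only from Python’s standard library. This is a minimal sketch that assumes `11307` is one of your assigned ports. `HelloHandler` and `make_server` are hypothetical names chosen for illustration.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Hypothetical test handler: answers every GET with a short plain-text body."""

    def do_GET(self) -> None:
        body = b"hello from the GPU server\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int = 11307, host: str = "0.0.0.0") -> ThreadingHTTPServer:
    """Create (but do not start) a server bound to host:port."""
    # 0.0.0.0 accepts connections on every interface, including the public one;
    # 127.0.0.1 would only accept connections from inside the server itself.
    return ThreadingHTTPServer((host, port), HelloHandler)
```

Start it with `make_server().serve_forever()`, then open `http://connectXX.trooper.ai:11307` from your own machine.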

⚠️ Can I use port 80 or 443?

No. Direct access to port 80 (HTTP) and 443 (HTTPS) is not available for security reasons.

If you need a secure HTTPS endpoint (e.g. for frontend embedding or OAuth redirect):

👉 SSL is set up automatically for every template. For anything else, contact support for help.

This will map your public port (e.g. 11307) to a secure HTTPS endpoint like:

https://ssl-access-id.app.trooper.ai/

This SSL proxy is included with all GPU plans and activated automatically on every template install. Data is securely routed on our internal network, providing enhanced security and ensuring certificates are specific to your GPU Server Blib’s network environment.

🔒 How can I secure my HTTP app?

You have four main options:

1. Use our managed HTTPS proxy (recommended, see the next section). It is deployed automatically on template install, and traffic is routed entirely over our internal network directly to your GPU Server Blib.

2. Run your own reverse proxy (e.g. nginx with Let’s Encrypt) on your public frontend (web) server. Note: you’ll still use a non-standard port like `11306` on the GPU server. Make sure to support WebSockets if needed.

3. Use a tunneling service such as an SSH tunnel, ngrok, or Cloudflare Tunnel. This gives you a public HTTPS domain quickly but is difficult to maintain, so use it only during rapid development.

4. Communicate via API (industry standard). Your public server talks to your GPU server only through an API. This can be combined with (1) the HTTPS proxy or (3) the SSH tunnel, and it is how production services are typically built.
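For option 4, your users only ever talk to your public server, and that server calls the GPU server’s API over HTTPS. The sketch below uses only the Python standard library. The base URL placeholder, the `/api/generate` path, and the JSON payload shape are assumptions for illustration, so substitute your app’s real endpoint.

```python
import json
import urllib.request

# Hypothetical values: replace with your proxy hostname and your app's real endpoint.
GPU_API_BASE = "https://<ssl-id>.app.trooper.ai"
ENDPOINT = "/api/generate"


def build_request(base_url: str, path: str, payload: dict) -> urllib.request.Request:
    """Build a JSON POST request from the public server to the GPU server."""
    data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + path,
        data=data,
        method="POST",
        headers={"Content-Type": "application/json", "Accept": "application/json"},
    )


def call_gpu_api(payload: dict, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON answer; raises on HTTP or network errors."""
    req = build_request(GPU_API_BASE, ENDPOINT, payload)
    # timeout applies to each socket operation (connect, each read), not the whole call.
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Keeping the GPU endpoint behind your own server lets you add authentication, rate limiting and retries in one place.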

How to use our SSL HTTPS Proxy correctly

Our SSL proxy always serves HTTPS on https://<ssl-id>.app.trooper.ai and forwards traffic over our internal network to your app’s port. It auto-detects what you’re doing (regular HTTP, streaming/chat, large downloads, WebSockets) and applies a smart timeout so long jobs don’t get cut off—but idle/broken connections won’t hang forever.

Timeout classes (what you get by default)

| Traffic type | How it’s detected | Proxy timeout |
|---|---|---|
| Regular HTTP request/response | Anything not matched below | 60s (DEFAULT) |
| Chat/Streaming (SSE / NDJSON) | Path contains `/api/chat`, `/chat/completions`, `/api/generate`, `/generate`, `/stream`, `/events`, or `Accept: text/event-stream` / `application/x-ndjson` | 10 min (LONG) |
| Large file downloads | `Range` header, file-like path/extension, `Accept: application/octet-stream` | 30 min (DOWNLOAD) |
| WebSockets | HTTP upgrade to WS/WSS | No proxy idle timeout |

The proxy also upgrades timeouts dynamically based on the response. For example, if your server sends `206 Partial Content` or `Content-Disposition: attachment`, the proxy switches to the DOWNLOAD timeout even if the request wasn’t initially classified as a download.
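To work out which class a given request falls into, you can express our reading of the rules above as a small Python function. This is an illustrative approximation, not the proxy’s actual code. The function names, the set of "file-like" extensions, and the order in which the rules are checked are all assumptions.

```python
from typing import Mapping, Optional

DEFAULT_S = 60          # regular HTTP
LONG_S = 10 * 60        # chat / streaming
DOWNLOAD_S = 30 * 60    # large downloads

STREAM_PATHS = ("/api/chat", "/chat/completions", "/api/generate", "/generate", "/stream", "/events")
STREAM_ACCEPT = ("text/event-stream", "application/x-ndjson")
# Assumed list of "file-like" extensions; the real proxy may use a different set.
FILE_EXTENSIONS = (".bin", ".safetensors", ".gguf", ".zip", ".tar", ".gz", ".pt", ".ckpt")


def classify_timeout(path: str, headers: Mapping[str, str]) -> Optional[int]:
    """Return the idle timeout in seconds, or None for WebSockets (no proxy idle timeout)."""
    h = {k.lower(): v.lower() for k, v in headers.items()}
    if h.get("upgrade") == "websocket":
        return None
    accept = h.get("accept", "")
    if "range" in h or "application/octet-stream" in accept or path.lower().endswith(FILE_EXTENSIONS):
        return DOWNLOAD_S
    if any(p in path for p in STREAM_PATHS) or any(a in accept for a in STREAM_ACCEPT):
        return LONG_S
    return DEFAULT_S


def upgrade_on_response(current: Optional[int], status: int, headers: Mapping[str, str]) -> Optional[int]:
    """Bump to the DOWNLOAD timeout when the response itself looks like a download."""
    if current is None:
        return None  # WebSockets keep "no idle timeout"
    h = {k.lower(): v.lower() for k, v in headers.items()}
    if status == 206 or h.get("content-disposition", "").startswith("attachment"):
        return max(current, DOWNLOAD_S)
    return current
```

For example, `classify_timeout("/api/chat", {})` falls into the LONG class. A plain `/predict` call that takes two minutes without sending any bytes would fall back to the 60s default.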

What you should do in your app

For chat/streaming (SSE/NDJSON)

- Send the right headers so the proxy knows it’s a stream:
  - SSE: `Content-Type: text/event-stream`
  - NDJSON: `Content-Type: application/x-ndjson`

- Emit a heartbeat at least every 15–30s (SSE can send a comment line `:\n`; NDJSON can push an empty line). This keeps the connection warm during long model runs.
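Here is a framework-agnostic sketch of the heartbeat rule. A generator turns results from a queue into SSE `data:` events and emits an SSE comment line whenever nothing arrives within the heartbeat interval. The queue-based design, the `sse_events` name, and the `None` end-of-stream sentinel are assumptions.

```python
import queue
from typing import Iterator, Optional

HEARTBEAT_SECONDS = 15.0  # inside the recommended 15-30 s window


def sse_events(results: "queue.Queue[Optional[str]]",
               heartbeat: float = HEARTBEAT_SECONDS) -> Iterator[bytes]:
    """Yield SSE frames for each queued string; a None item ends the stream."""
    while True:
        try:
            item = results.get(timeout=heartbeat)
        except queue.Empty:
            # Nothing produced yet (e.g. the model is still running): send an SSE
            # comment line, which clients ignore, so the connection never goes idle.
            yield b": heartbeat\n\n"
            continue
        if item is None:
            return
        # One event per item; multi-line payloads need one "data:" line per line.
        payload = "".join(f"data: {line}\n" for line in item.split("\n"))
        yield (payload + "\n").encode("utf-8")
```

Your inference worker puts strings into the queue. The generator can then be returned as a `text/event-stream` body, for example with Flask’s `Response(gen, mimetype="text/event-stream")` or Starlette’s `StreamingResponse(gen, media_type="text/event-stream")`. For NDJSON, the same loop would yield one `json.dumps(obj) + "\n"` per item and a bare `b"\n"` as the heartbeat.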

For WebSockets

- Keep ping/pong enabled (typically every 20–30s). The proxy won’t close a WebSocket for being idle, so keeping it alive is up to your app.

For large downloads

- Support HTTP Range requests (resume, partial transfers) and return `206 Partial Content` when appropriate.

- Add `Content-Disposition: attachment` for downloadable files.

- Use a specific `Content-Type` (or let clients request with `Accept: application/octet-stream`) so the proxy classifies the response as a download and applies the longer window.
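Most frameworks and static file servers handle `Range` for you. If you serve files by hand, the core of it is parsing a single `bytes=start-end` range and answering with `206`, a `Content-Range` header, and the matching slice. The sketch below handles a single range only; multi-range requests are simply ignored. `parse_range` and `range_response_headers` are hypothetical helper names.

```python
import re
from typing import Optional, Tuple

_RANGE_RE = re.compile(r"^bytes=(\d*)-(\d*)$")


def parse_range(header: Optional[str], size: int) -> Optional[Tuple[int, int]]:
    """Return an inclusive (start, end) byte range, or None to send the whole file with 200.

    Raises ValueError when the range cannot be satisfied (answer with 416).
    """
    if not header:
        return None
    m = _RANGE_RE.match(header.strip())
    if not m:
        return None  # unsupported syntax, e.g. multiple ranges: ignore the header
    first, last = m.groups()
    if first == "":
        if last == "":
            return None  # "bytes=-" is invalid: ignore the header
        length = int(last)  # suffix range "bytes=-N" means the last N bytes
        if length == 0 or size == 0:
            raise ValueError("unsatisfiable range")
        return max(size - length, 0), size - 1
    start = int(first)
    if last != "" and int(last) < start:
        return None  # last < first is invalid: ignore the header
    if start >= size:
        raise ValueError("unsatisfiable range")
    end = size - 1 if last == "" else min(int(last), size - 1)
    return start, end


def range_response_headers(start: int, end: int, size: int) -> dict:
    """Headers for a 206 Partial Content response covering bytes start..end."""
    return {
        "Content-Range": f"bytes {start}-{end}/{size}",
        "Content-Length": str(end - start + 1),
        "Accept-Ranges": "bytes",
        "Content-Disposition": "attachment",
    }
```

When `parse_range` returns a tuple, read `end - start + 1` bytes from offset `start` and send them with status `206` and these headers. The `206` status alone is enough for the proxy to switch to the DOWNLOAD window.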

Quick tests

SSE stream (curl):

```bash
curl -N -H "Accept: text/event-stream" https://<ssl-id>.app.trooper.ai/api/stream
```

NDJSON stream:

```bash
curl -N -H "Accept: application/x-ndjson" https://<ssl-id>.app.trooper.ai/generate
```

Resumable download:

```bash
curl -O -H "Range: bytes=0-" https://<ssl-id>.app.trooper.ai/files/big-model.bin
```
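If you’d rather test from Python than with curl, you can consume the same NDJSON stream with the standard library. This sketch skips the empty heartbeat lines described above. `iter_ndjson` and `stream_generate` are hypothetical helpers, and the `/generate` path simply mirrors the curl example.

```python
import json
import urllib.request
from typing import IO, Iterator


def iter_ndjson(stream: IO[bytes]) -> Iterator[dict]:
    """Yield one JSON object per line, ignoring blank heartbeat lines."""
    for raw in stream:
        line = raw.strip()
        if not line:
            continue  # heartbeat / keep-alive line
        yield json.loads(line)


def stream_generate(url: str, timeout: float = 120.0) -> Iterator[dict]:
    """Open an NDJSON stream; timeout is per read, so heartbeats keep it from firing."""
    req = urllib.request.Request(url, headers={"Accept": "application/x-ndjson"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        yield from iter_ndjson(resp)
```

Usage: `for obj in stream_generate("https://<ssl-id>.app.trooper.ai/generate"): print(obj)`, with `<ssl-id>` replaced by your own proxy ID.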

Gotchas (and fixes)

- Long compute with no output: it will be treated as regular HTTP and may hit the 60s idle limit. Fix by streaming partial results/heartbeats (or switch the endpoint to SSE/NDJSON).

- Downloads without file extensions: set `Content-Disposition: attachment` and a proper `Content-Type`, or have the client send `Accept: application/octet-stream`. This ensures the 30 min download window.

🧪 How can I test if the port is reachable?

From inside your server:

```bash
ss -tlnp | grep :11307
```

You should see:

```
LISTEN 0 128 0.0.0.0:11307 ...
```

From your local machine:

```bash
curl http://connectXX.trooper.ai:11307
```

If the port hangs or times out:

- Make sure your app is running

- Check that it listens on `0.0.0.0`

- Confirm you’re using a valid assigned port
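To script the reachability check, for example from your local machine or a CI job, a plain TCP connect is enough. Here is a minimal standard-library sketch. `port_is_open` is a hypothetical helper, and `connectXX.trooper.ai` remains a placeholder for your hostname.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS failures (all OSError subclasses).
        return False
```

Usage: `port_is_open("connectXX.trooper.ai", 11307)`. A `False` result points to one of the three causes listed above.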

🧰 Example: Port forwarding with socat

If your app only runs on localhost:8000, you can forward it:

```bash
sudo socat TCP-LISTEN:11307,fork TCP:localhost:8000
```

This exposes localhost:8000 externally as connectXX.trooper.ai:11307.
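If `socat` isn’t installed, you can do the same forwarding in Python with threads. This is a minimal, non-hardened stand-in with no connection limits and no logging. It assumes your app listens on `localhost:8000` and that `11307` is one of your assigned ports. `serve_forwarder` and its helpers are hypothetical names.

```python
import socket
import threading
from typing import Tuple


def _pipe(src: socket.socket, dst: socket.socket) -> None:
    """Copy bytes from src to dst until src closes, then half-close dst."""
    try:
        while True:
            chunk = src.recv(65536)
            if not chunk:
                break
            dst.sendall(chunk)
    except OSError:
        pass
    finally:
        try:
            dst.shutdown(socket.SHUT_WR)
        except OSError:
            pass


def _handle(client: socket.socket, target: Tuple[str, int]) -> None:
    """Relay one accepted client connection to the target in both directions."""
    with client:
        try:
            upstream = socket.create_connection(target)
        except OSError:
            return  # target app not running: drop the client
        with upstream:
            back = threading.Thread(target=_pipe, args=(upstream, client), daemon=True)
            back.start()
            _pipe(client, upstream)
            back.join()


def serve_forwarder(listen_port: int, target: Tuple[str, int],
                    host: str = "0.0.0.0") -> socket.socket:
    """Forward host:listen_port -> target in background threads; returns the listener."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind((host, listen_port))
    listener.listen()

    def accept_loop() -> None:
        while True:
            try:
                client, _ = listener.accept()
            except OSError:
                return  # listener was closed
            threading.Thread(target=_handle, args=(client, target), daemon=True).start()

    threading.Thread(target=accept_loop, daemon=True).start()
    return listener
```

Usage: call `serve_forwarder(11307, ("localhost", 8000))` from a long-running script. The threads are daemons, so the script itself must stay alive (for example with `threading.Event().wait()`).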

🔁 Port Remapping and Additional Ports

Public port ranges are automatically assigned and cannot be modified.

If your application requires more ports than the standard allocation (such as for parallel applications or multi-tenant deployments), please contact our support team after reviewing our documentation thoroughly. It is important to understand that GPU servers are not intended to function as public-facing web servers, and we do not offer the ability to open additional public ports.

🧯 Troubleshooting

- ❌ App not reachable: Check that it’s bound to `0.0.0.0`

- ❌ Wrong port: Only use ports assigned to your server

- ❌ No HTTPS: Check that the SSL proxy was created automatically during template install

- ❌ Firewall inside container: Disable or open internal rules (don’t use UFW at all!)

- ❌ App crashes immediately: Check logs and system resources

- ❌ UDP connections: We only support stateful TCP connections for security reasons

🧑‍💻 Example: FastAPI on a public port

```python
import uvicorn
from fastapi import FastAPI

app = FastAPI()  # your FastAPI application; add routes as usual
uvicorn.run(app, host="0.0.0.0", port=11308)
```

Access it at:

http://connectXX.trooper.ai:11308

Need HTTPS? You should automatically see the lock icon on your template installs pointing to:

https://ssl-token.app.trooper.ai/


💬 Still stuck?

We’re here to help. Please have the following information ready before contacting us:

- Your Server ID (e.g. `aiXX_trooperai_000XXX`)

- The port number you’re using

- A screenshot or terminal output showing your issue

- Go to: Support Contacts